If you haven’t read Part 1 of this blog yet, you may want to start there to get setup: Want to Drive Expansion and Upsell at Your Startup? Try SQL

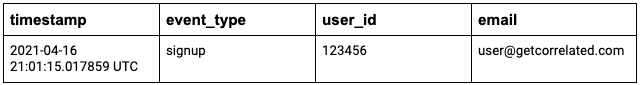

Alright, enough background! Let’s get our hands dirty. We’re going to assume that you’re already connected to a database, and you have access to a table called “user_events” that has the following schema:

This type of data is what we call “time-series” data, and it’s a really common way to describe product usage.

You can use `\d [table_name]` to get the schema for this table in PostgreSQL.

How to Write Your First SQL Query

When you want to query a SQL table, you need to tell the database a couple things - (1) what action you want to do, (2) what attributes (or columns) you want to include in that action, (3) which table you are looking at, (4) what entities (or rows) you want to include in that action.

The only “action” you should ever really do is “SELECT”. This is because you should only be requesting data from the database, never modifying it!! The SELECT statement tells the database that you want it to return some data.

Let’s go ahead and query our table using the following:

SELECT timestamp, event_type, user_id, email

FROM user_events

LIMIT 1000

You’ve essentially told the database - I want to SELECT some columns. The columns I want to select are timestamp, event_type, user_id, email. I want to select these columns FROM the user_events table. I also don’t want the database to pull all the entities (or rows) so I’m going to ask it to LIMIT the pull to just 1000 entities. What’s important to note here is that there is no order here, so don’t expect LIMIT to order the entities that it is returning.

If you have any familiarity with SQL, you might be wondering why we didn’t just use SELECT *

FROM user_events

LIMIT 1000

The reason why you do not want to do this is because depending on the database you’re using, this could be a very very expensive query. In PostgreSQL, adding LIMIT 1000 typically prevents this query from being expensive, but if you’re using something like BigQuery, you’ll end up reading the entire table. This is expensive (not just computing wise, but cost wise) and will make your engineering team unhappy. To avoid that, try to avoid using SELECT * when possible!

Ok, so with the above query, you’re able to see 1000 events that users have done in your product. Awesome!

1. See which users logged in in the last week

As we discussed, the events you pulled are not ordered or filtered, so really the only reason to run the above query is to get a look at what the data looks like. `\d` only shows you the names of each column / attribute - it doesn’t show you what the data actually looks like. That’s really not too useful if you want to figure out which users to reach out to! Instead, perhaps you want to get a list of users who signed up in the last week and use that list to create an outreach campaign. In order to do that, we’ll need some more advanced SQL!

SELECT timestamp, event_type, user_id, email

FROM user_events

WHERE (now() - interval '7 days') < timestamp

AND event_type = 'login'

ORDER BY timestamp desc

Here, we are again telling the database that we want to SELECT the columns / attributes timestamp, event_type, user_id, email FROM the user_events table. The WHERE clause is used to tell the database what entities / rows to include in the result based on the values each entity has for a given attribute. In our WHERE clause, we tell PostgreSQL to do some timestamp math. NOW() is a PostgreSQL function that returns the timestamp right now. Interval ‘7 days’ subtracts 7 days from the time right now. Then, PostgreSQL compares the value each entity has for the timestamp attribute to that value. If the timestamp attribute is greater than the timestamp 7 days ago, the entire entity will be included. The AND clause tells PostgreSQL that we also want to filter by the event_type attribute, and we only want entities where the event_type attribution is a signup. Finally, we order the results using the ORDER BY clause so that we can see the most recent events first. This ultimately gets us a time-ordered table of all the signups that occurred in the last week.

2. Get a deduplicated list of emails to send outreach campaigns to

The above query has a severe limitation when it comes to going from that list of events to a list of emails you can use for outreach because a user might have logged in multiple times in the last week. That means that you’ll have the same email showing up multiple times in the table. Some people just export things into Excel and de-duplicate there, but we’re advanced SQL users!

SELECT DISTINCT email, event_type

FROM user_events

WHERE (now() - interval '7 days') < timestamp

AND event_type = 'login'

Here, we are telling the database that we want to SELECT email and event_type. DISTINCT is used in front of email here to tell the database that we only want distinct emails. I included event_type because it’s useful to make sure you wrote the query correctly, but it’s not necessary. We are still querying from the same table and using the same WHERE clause, but since we don’t want a list of events anymore, we’ve removed the timestamp and ordering. This will get you a list of unique emails who logged in in the last 7 days.

3. See which users logged in more than 5 times in the last week

Let’s go a little crazier, and try to build a smarter list of users to reach out to. Just reaching out to everyone who logged in might result in sending out thousands of emails. How about reaching out to users who logged in more than 5 times a week?

SELECT email, COUNT(DISTINCT timestamp)

FROM user_events

WHERE (now() - interval '7 days') < timestamp

AND event_type = 'login'

GROUP BY email

Here, we’ve used the COUNT() function to tell the database to count all the unique timestamps that match the filters we’ve created. We use GROUP BY to tell the database to count timestamps for each email. Essentially, we are grouping the count by email. This will get you a table of all emails with the number of times they logged in.

You’re probably wondering why we didn’t filter out the table for users who logged in more than 5 times. This is because you can’t add the COUNT() to the WHERE clause, since your WHERE clause impacts the COUNT(). That’s some circular logic right there.

SELECT email, COUNT(DISTINCT timestamp)

FROM user_events

WHERE (now() - interval '7 days') < timestamp

AND event_type = 'login'

GROUP BY email

HAVING COUNT(DISTINCT timestamp) > 5

Intead, you have to use the HAVING keyword to use the aggregate function as a filter. There we have it!

A quick introduction to JOINs

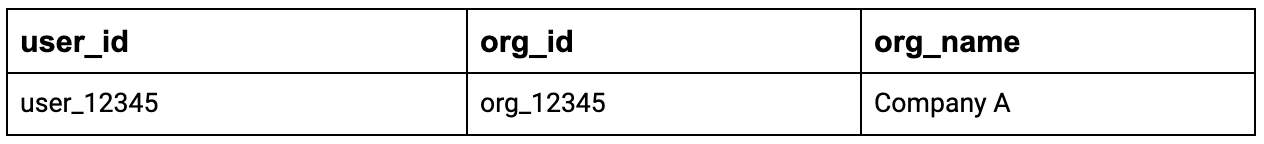

JOINs are a really powerful concept, but are also very prone to errors if you aren’t careful. The JOIN clause essentially tells the database to take two tables and combine them. The database will combine the two tables if the attributes / columns for an entity in one table matches the attributes / columns for an entity in the second table. You want to use JOINs when you need “meta data”. What is that? Well, if you remember our user_events table earlier, that’s a time-series table with timestamps. Meta data is not timestamped, and instead describes an object. For example, you might have a meta data table that tracks which organization a user is a part of. Let’s introduce a new table here, the “organizations” table.

The organization table basically maps a user back to the organization they are a part of. You might be wondering why we wouldn’t just add the org_id to the user_events table. Well, that’s certainly potentially how your organization has done it - but often, organizations choose not to do that because meta data is more complex than just an org_id, and if you imagine the millions of potential events coming in every day, tagging every single event with a bunch of meta data is how you rack up data expenses.

4. Get a list of all users who logged in from Company A

Let’s say you want to reach out to all of the active users from Company A to invite them to a training. You can’t just use the user_events table because the user_events table doesn’t contain the organization name. You’ll have to use the user_id as a “foreign key” to find the associated organization_name in the organizations table. A foreign key basically points to another attribute / column in a different table.

SELECT email, COUNT(DISTINCT timestamp), org_name

FROM user_events

JOIN organization ON user_events.user_id=organization.user_id

WHERE (now() - interval '7 days') < timestamp

AND event_type = 'login'

AND org_name = 'Company A'

GROUP BY email, org_name

HAVING COUNT(DISTINCT timestamp) > 5

Let’s talk about the JOIN clause first. Essentially, the database will combine the user_events table with the organization table by comparing the values in the user_id column in both tables. The ‘.’ syntax basically tells the database - compare the user_id column from the user_events table to the user_id column in the organization table. You need the ‘.’ syntax when two columns in different tables have the same name. In the SELECT statement, you tell the database what columns you want to include in the results. These can come from either table. If you have a repetitive column name, you’ll have to use the ‘.’ syntax to tell the database which column to use. You’ll see that we added a filter for org_name, and we had to group by org_name, since we’re now counting distinct timestamps for all emails in a certain organization.

One thing to note is that the reason why JOINs are difficult is that you have to be smart about how to handle duplicates. As you saw with the GROUP BY clause, if you have someone with the same email, but two different organizations, you’ll get two different values. Also, JOINs have different types - LEFT, RIGHT, OUTER, INNER which do different things depending on if a row from one table had a match in another table. All this is to say, be wary of JOINs and make sure to double check your work.

What’s Next?

SQL is powerful, but also incredibly complicated. We only touched the very surface of what is possible with SQL. That being said, as a member of a revenue team, your job is to drive customer success, increase sales, and find expansion opportunities. At Correlated, we’re building a tool that lets you create the lists that we built earlier (and much much more) without SQL.

We convert your criteria for these lists into Signals that fire off to downstream applications like Slack, Salesforce, and Outreach whenever a user matches those criteria. It’s completely hands free and also really easy to adjust so that you can test all your cool new ideas on what makes up an interesting customer. If you’re interested in getting the insights we walked through above without having to write SQL, check us out!

.png)

.jpg)